The DevOps of Data Management: A Complete Guide to DataOps

Struggling with slow, unreliable data pipelines? Discover DataOps, the DevOps of data management. Learn principles, real-world use cases, best practices, and how to build robust data systems.

If you've spent any time in the world of software, you've felt the impact of DevOps. It’s the philosophy that broke down the walls between development and operations, giving us continuous integration, continuous delivery, and the ability to ship code faster and more reliably than ever before.

But for years, a parallel universe has been operating in a different, often more painful, reality: the world of data.

This is the world of the "data pipeline": a mysterious, often fragile series of scripts, jobs, and processes that somehow transform raw, messy data into clean, structured reports and dashboards. It’s a world where:

A tiny change in a data source breaks a critical report for three days.

The "data team" is a black box, and business analysts wait weeks for a new data point.

No one is entirely sure if the numbers in the CEO’s dashboard are correct.

Testing a data transformation? That’s what the "production run" is for.

Sound familiar?

What if we could apply the same transformative principles of DevOps to this chaotic world of data? What if we could have continuous integration for our data models, continuous delivery for our analytics, and collaborative transparency between data engineers, scientists, and business users?

This isn't a "what if." This is the reality of DataOps—the DevOps of data management.

In this deep dive, we'll unpack everything you need to know about DataOps. We'll define it, explore its core principles, walk through real-world examples, and provide a blueprint for getting started. Let's bridge the gap between data promise and data reality.

What Exactly is DataOps? More Than Just a Buzzword

At its heart, DataOps is a collaborative data management practice focused on improving the communication, integration, and automation of data flows between data managers and data consumers across an organization.

Think of it as the cultural and technical bridge that connects data creators (engineers), data curators (scientists, analysts), and data consumers (business teams).

It’s not a single tool, nor is it a single job title. It's a methodology, a set of practices, and a culture. It takes the agile, lean, and DevOps principles that revolutionized software development and applies them to the entire data lifecycle.


The Foundational Pillars of DataOps

To move from theory to practice, DataOps stands on three key pillars. Ignoring any one of them will leave your initiative wobbly.

1. Culture and Collaboration

This is the most critical and often the most challenging pillar. DataOps requires a fundamental shift in mindset.

From Silos to Teams: Data engineers can't work in isolation, throwing "finished" pipelines over the wall to data scientists. DataOps encourages cross-functional teams where data engineers, scientists, analysts, and even business stakeholders collaborate from the start.

Shared Responsibility: Everyone is responsible for data quality and pipeline health, not just the "last person who touched it."

Blameless Post-Mortems: When a pipeline breaks or data quality degrades, the goal is not to find a person to blame but to understand the systemic cause and prevent it from happening again.

2. Processes and Practices

This is where we operationalize the culture. These are the day-to-day activities that make DataOps work.

Agile for Data: Applying agile methodologies to data projects. This means working in short sprints, having daily stand-ups, and continuously delivering value in small, incremental chunks instead of massive, monolithic data warehouse projects.

Version Control for Everything: Not just for application code, but for your data pipeline code, SQL queries, data models, and even configuration files (like Dockerfiles and Kubernetes manifests). Git is your single source of truth.

Continuous Everything:

Continuous Integration (CI): Automatically building and testing your data pipeline code whenever a change is committed to version control. This includes testing data transformations and validating schema changes.

Continuous Delivery (CD): Automatically deploying your validated data pipelines to staging and production environments.

Data Orchestration: Using tools like Apache Airflow, Prefect, or Dagster to define, schedule, and monitor your data workflows as directed acyclic graphs (DAGs). This makes dependencies explicit and failures easy to trace.

Data Monitoring and Observability: It's not enough to know if a pipeline ran; you need to know if it ran correctly. This means monitoring data freshness (is the data on time?), data quality (are the values within expected ranges?), and data lineage (where did this number come from?).
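Of those three signals, freshness reduces to comparing the newest load time against an agreed maximum lag. The sketch below is a minimal plain-Python illustration; the function name `check_freshness` and the 30-minute threshold are hypothetical, and in practice the timestamp would come from your warehouse or pipeline metadata.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def check_freshness(
    last_loaded_at: datetime,
    max_lag: timedelta,
    now: Optional[datetime] = None,
) -> dict:
    """Report how far behind a dataset is and whether that is acceptable.

    last_loaded_at: timestamp of the newest successfully loaded record (timezone-aware).
    max_lag: the freshness target agreed with data consumers (hypothetical value below).
    now: injectable clock, so the check is deterministic in tests.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    lag = now - last_loaded_at
    return {"lag_seconds": int(lag.total_seconds()), "is_fresh": lag <= max_lag}


# Usage with fixed, made-up timestamps: data loaded 45 minutes ago against a 30-minute target.
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
result = check_freshness(now - timedelta(minutes=45), timedelta(minutes=30), now=now)
print(result)  # {'lag_seconds': 2700, 'is_fresh': False}
```

A scheduler could run a check like this after every load and alert (or fail the run) when `is_fresh` is false, while a dashboard charts `lag_seconds` over time.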

3. Technology and Tools

The tools are the enablers. You don't need every tool, but you need a stack that supports the practices above.

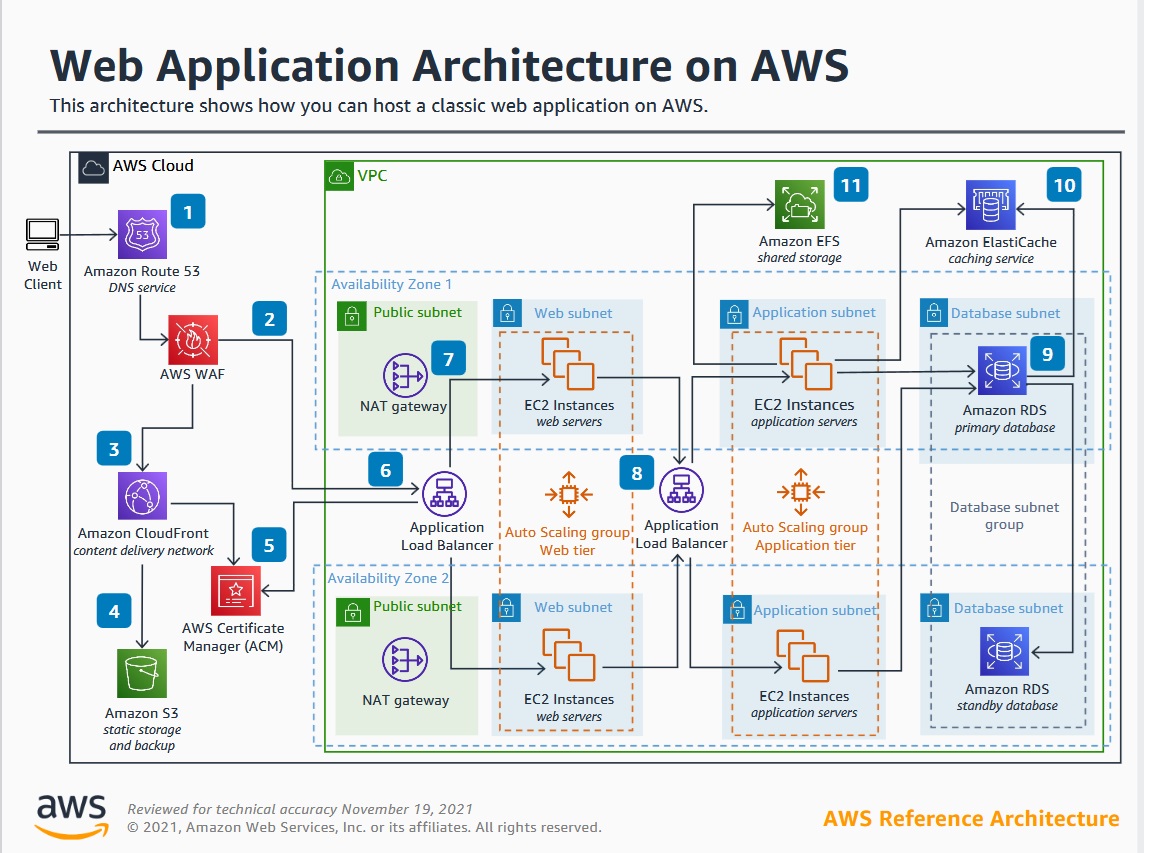

Orchestration: Apache Airflow, Prefect, Dagster, AWS Step Functions.

Version Control: Git (GitHub, GitLab, Bitbucket).

CI/CD: Jenkins, GitLab CI/CD, GitHub Actions, CircleCI.

Infrastructure as Code (IaC): Terraform, AWS CloudFormation, or Ansible, used to provision the underlying data infrastructure (databases, clusters, storage) in a repeatable, version-controlled way.

Containerization: Docker to package pipeline components, and Kubernetes to orchestrate them, ensuring consistency from a developer's laptop to production.

Data Testing & Quality: Great Expectations, dbt (data build tool) tests, Soda SQL.

Monitoring: Datadog, Prometheus/Grafana, and custom dashboards built from pipeline metadata.
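Whichever CI service you choose, the tests it runs on each commit can be ordinary Python. The sketch below assumes pytest as the test runner; `clean_orders` is a hypothetical transformation standing in for real pipeline logic, and the test functions also run as a plain script without pytest installed.

```python
# test_transforms.py: run with `pytest`, or as a plain script.
from typing import Dict, List

Row = Dict[str, object]


def clean_orders(rows: List[Row]) -> List[Row]:
    """Hypothetical transformation: drop orders without a customer_id and
    convert revenue (which arrives as text) to a float rounded to cents."""
    cleaned = []
    for row in rows:
        if row.get("customer_id") is None:
            continue
        cleaned.append({**row, "revenue": round(float(row["revenue"]), 2)})
    return cleaned


def test_orders_without_customer_id_are_dropped() -> None:
    rows = [
        {"customer_id": None, "revenue": "10.00"},
        {"customer_id": 7, "revenue": "5.50"},
    ]
    assert [r["customer_id"] for r in clean_orders(rows)] == [7]


def test_revenue_is_converted_and_rounded() -> None:
    result = clean_orders([{"customer_id": 1, "revenue": "19.999"}])
    assert result[0]["revenue"] == 20.0


if __name__ == "__main__":
    test_orders_without_customer_id_are_dropped()
    test_revenue_is_converted_and_rounded()
    print("all transformation tests passed")
```

In CI, a step such as `pytest -q` would fail the build if either assertion fails, so a broken transformation never reaches the deploy stage.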

DataOps in Action: A Real-World Use Case

Let's make this concrete. Imagine "Global E-Commerce Corp." Their old, fragile data pipeline looked like this:

Extract: A nightly, monolithic SQL script dumps data from their production OLTP database.

Transform: A complex, 10,000-line Python script runs on a single, large server, trying to clean, join, and aggregate the data. It frequently runs out of memory and fails.

Load: If it succeeds, it loads the data into a data warehouse. Business analysts then build Tableau dashboards from this data.

The Problem: The process takes 12 hours. If it fails at 3 AM, a data engineer gets paged, spends 4 hours debugging, and the business doesn't get its daily data until noon. The CEO asks, "Why are we only seeing yesterday's numbers at lunchtime?"

Implementing DataOps, they redesign the pipeline:

Culture & Team: They form a "Customer Insights" squad with two data engineers, a data scientist, and a business analyst.

Process & Technology:

Version Control: All pipeline code, SQL, and Airflow DAGs are stored in Git.

CI/CD: On every Git commit, GitHub Actions kicks off a pipeline that:

Builds a Docker image with the transformation logic.

Runs a suite of tests using pytest and Great Expectations (e.g., "customer_id should never be null," "revenue should be positive").

If tests pass, it pushes the new Docker image to a container registry.

Orchestration: Apache Airflow now manages the workflow. It doesn't run one big script. Instead, it runs smaller, interdependent tasks:

Task 1: Extract new data incrementally (using timestamps).

Task 2: Run data quality checks on the raw extract.

Task 3: Transform customer data.

Task 4: Transform order data.

Task 5: Join customer and order data.

Each task is its own container, making it scalable and resilient.

Monitoring: A Grafana dashboard shows real-time metrics: Pipeline run status, data freshness (now only 15 minutes behind!), and quality check pass/fail rates.
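The five orchestration tasks in this case study form a small directed acyclic graph. Airflow expresses such dependencies with operators like `>>`; the sketch below instead uses Python's standard-library `graphlib` (3.9+) to show the same idea without an orchestrator, assuming Tasks 3 and 4 each depend only on the quality-checked extract. The task IDs are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical IDs for Tasks 1-5; each task maps to the set of tasks it depends on.
PIPELINE: Dict[str, Set[str]] = {
    "extract_incremental": set(),
    "check_raw_quality": {"extract_incremental"},
    "transform_customers": {"check_raw_quality"},
    "transform_orders": {"check_raw_quality"},
    "join_customers_orders": {"transform_customers", "transform_orders"},
}


def execution_waves(graph: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into waves: every task in a wave has all its dependencies met,
    so tasks within one wave could run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph is not acyclic
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


for number, wave in enumerate(execution_waves(PIPELINE), start=1):
    print(f"wave {number}: {', '.join(wave)}")
# wave 1: extract_incremental
# wave 2: check_raw_quality
# wave 3: transform_customers, transform_orders
# wave 4: join_customers_orders
```

The third wave holds both transform tasks, which is why an orchestrator can run them side by side; and if a dependency cycle slipped in, `prepare()` would raise `graphlib.CycleError` before any task ran.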

The Result: The pipeline now runs every hour, not every day. Failures are isolated to a single task and can often auto-retry. The business has near-real-time insights, and the data team spends less time firefighting and more time building new features. The business analyst, empowered by dbt, can even make and test simple data model changes themselves via a Git pull request.
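Auto-retry is usually a per-task setting in the orchestrator (Airflow, for instance, exposes `retries` and `retry_delay` on each task). To show what such a setting does, here is a minimal hand-rolled retry loop with exponential backoff; `run_with_retries` and `flaky_extract` are hypothetical names, and the `sleep` parameter is injected so the example runs instantly.

```python
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


def run_with_retries(
    task: Callable[[], T],
    max_attempts: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call task(); after each failure wait base_delay * 2**(attempt - 1) seconds.

    Re-raises the last exception once max_attempts attempts have failed.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception:
            if attempt == max_attempts:
                raise
            sleep(base_delay * 2 ** (attempt - 1))
    raise ValueError("max_attempts must be at least 1")


attempts = {"count": 0}


def flaky_extract() -> str:
    """Hypothetical task that fails twice (a transient connection error) and then succeeds."""
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("source database temporarily unavailable")
    return "extracted"


delays: List[float] = []
print(run_with_retries(flaky_extract, sleep=delays.append), delays)  # extracted [1.0, 2.0]
```

Retrying is only safe for idempotent tasks, such as an incremental extract keyed on timestamps, so that repeating a half-finished attempt does not duplicate data.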

Best Practices for a Successful DataOps Implementation

Ready to start your journey? Don't try to boil the ocean. Start small and iterate.

Start with a Single, High-Impact Pipeline: Choose a pipeline that is both valuable to the business and notoriously painful. Use it as your pilot project to prove the value of DataOps.

Treat Data as a Product: Shift your thinking. The consumers of your data (analysts, scientists, applications) are your customers. Your goal is to provide them with a high-quality, reliable, and easy-to-use data product.

Implement Data Quality Checks from Day One: Don't wait until the end. Embed data quality checks (for freshness, volume, schema, and value validity) at every stage of your pipeline.

Embrace Metadata and Data Lineage: Use tools to automatically track the flow of data. When a CEO questions a number in a dashboard, you should be able to trace it back through every transformation to its source in minutes, not days.

Automate Your Infrastructure: Use Terraform or CloudFormation to manage your data infrastructure. This makes environments reproducible, eliminates configuration drift, and allows you to easily spin up test environments.

Focus on the Developer Experience: Make it easy for your data team to develop and test locally. Use Docker Compose to mimic production dependencies. A happy, efficient data team builds better pipelines.
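Checks like "customer_id should never be null" and "revenue should be positive" are what tools such as Great Expectations, dbt tests, or Soda express declaratively. The sketch below does not use any of those tools' APIs; it is a plain-Python illustration, with hypothetical column names and expectations, of the volume, schema, and value-validity checks that could run at each pipeline stage.

```python
from typing import Callable, Dict, List, Tuple

Row = Dict[str, object]

# Hypothetical schema and expectations for an orders table.
EXPECTED_COLUMNS = {"customer_id", "order_id", "revenue"}
EXPECTATIONS: List[Tuple[str, Callable[[Row], bool]]] = [
    ("customer_id should never be null", lambda r: r["customer_id"] is not None),
    ("revenue should be positive",
     lambda r: isinstance(r["revenue"], (int, float)) and r["revenue"] > 0),
]


def validate_batch(rows: List[Row], min_rows: int = 1) -> List[str]:
    """Return human-readable failures; an empty list means every check passed."""
    failures = []
    if len(rows) < min_rows:  # volume check
        failures.append(f"volume: got {len(rows)} rows, expected at least {min_rows}")
    for i, row in enumerate(rows):
        missing = EXPECTED_COLUMNS - set(row)  # schema check
        if missing:
            failures.append(f"row {i}: missing columns {sorted(missing)}")
            continue  # value checks need the columns to exist
        for name, check in EXPECTATIONS:  # value-validity checks
            if not check(row):
                failures.append(f"row {i}: {name}")
    return failures


rows = [
    {"customer_id": 1, "order_id": 10, "revenue": 25.0},
    {"customer_id": None, "order_id": 11, "revenue": 12.5},
    {"customer_id": 3, "order_id": 12, "revenue": -4.0},
    {"customer_id": 4, "revenue": 9.0},
]
for failure in validate_batch(rows):
    print(failure)
# row 1: customer_id should never be null
# row 2: revenue should be positive
# row 3: missing columns ['order_id']
```

A pipeline would typically fail the task, or quarantine the offending rows, whenever the returned list is non-empty, so problems surface at the stage where they appear rather than in a dashboard.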

Building the skills to implement these practices is crucial. A strong foundation in software engineering principles is what separates a traditional data professional from a DataOps practitioner. To learn professional software development through courses such as Python Programming, Full Stack Development, and MERN Stack, which provide the essential building blocks for modern data engineering, visit and enroll today at codercrafter.in.

Frequently Asked Questions (FAQs) About DataOps

Q1: Is DataOps just DevOps for data engineers?

While the principles are similar, the execution is different. DataOps deals with unique challenges like data quality, large-volume processing, and the inherently stateful nature of data, which pure application DevOps doesn't typically face.

Q2: How is DataOps different from Data Governance?

They are complementary. Data Governance defines the policies: "What data is sensitive?" "Who can access it?" "What does 'customer revenue' officially mean?" DataOps is the execution: it implements those policies automatically in the pipelines (e.g., automatically masking PII) and ensures the defined metrics are delivered reliably.
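As a small illustration of policy-as-code, suppose governance has classified `email` and `phone` as PII. The sketch below pseudonymizes those columns with a keyed hash (HMAC-SHA256 from Python's standard library) before data leaves the pipeline. The column list and `mask_row` are hypothetical, and in a real deployment the key would come from a secret manager rather than source code.

```python
import hashlib
import hmac
from typing import Dict

# Hypothetical policy output: columns the governance team has classified as PII.
PII_COLUMNS = {"email", "phone"}


def mask_row(row: Dict[str, object], secret_key: bytes) -> Dict[str, object]:
    """Replace PII values with a keyed SHA-256 digest; leave other columns untouched.

    For a given key the same input always yields the same digest, so masked
    columns can still be joined or counted without exposing the original value.
    """
    masked = {}
    for column, value in row.items():
        if column in PII_COLUMNS and value is not None:
            digest = hmac.new(secret_key, str(value).encode("utf-8"), hashlib.sha256)
            masked[column] = digest.hexdigest()
        else:
            masked[column] = value
    return masked


key = b"example-key-load-from-a-secret-manager"  # placeholder; never hard-code real keys
row = {"customer_id": 42, "email": "ana@example.com", "phone": None, "country": "PT"}
masked = mask_row(row, key)
print(masked["customer_id"], masked["country"], masked["phone"])  # 42 PT None
print(len(masked["email"]))  # 64
```

A keyed hash is used instead of a plain SHA-256 because common identifiers such as email addresses can be recovered from an unkeyed hash by hashing candidate values and comparing the results.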

Q3: Do I need to be using the cloud to do DataOps?

While the cloud (AWS, Azure, GCP) provides the elastic, API-driven infrastructure that makes DataOps dramatically easier, the principles apply on-premise too. Managed tooling and elastic scaling are simply more readily available in the cloud.

Q4: We're a small team. Is DataOps overkill for us?

Not at all! In fact, small teams benefit the most. The automation and reliability built into DataOps prevent you from being overwhelmed by manual support tasks as you grow. Starting with just version control and a simple CI/CD pipeline for your scripts is a fantastic first step.

Q5: What's the role of a Data Scientist in a DataOps culture?

They become active contributors to the data product, not just consumers. They might write and version-control their own feature transformation logic, contribute to data quality tests for their models, and use the same orchestration tools to productionize their machine learning models (a practice often called MLOps, which builds on the same principles as DataOps).

Conclusion: From Chaos to Confidence

The era of the fragile, black-box data pipeline is ending. The business demand for timely, trustworthy data is simply too high. DataOps isn't a magic bullet, but it is a proven roadmap out of the chaos.

It’s a journey that starts with a cultural commitment to collaboration and quality, is enabled by modern processes like CI/CD and orchestration, and is powered by a robust toolchain. By embracing DataOps, you stop being a team that reacts to broken pipelines and start being a team that proactively delivers data as a strategic, reliable asset.

The transition requires new skills: a blend of data engineering and software engineering. It’s about writing not just queries, but production-grade, testable, and maintainable code. If you're looking to build that skillset from the ground up, a solid understanding of core software development is non-negotiable. To learn professional software development through courses such as Python Programming, Full Stack Development, and MERN Stack, visit and enroll today at codercrafter.in.