Machine Learning Integration: Your No-BS Guide to Making AI Work in Real Apps

Tired of just theory? Learn how to integrate ML models into real-world applications. Covers APIs, deployment, best practices, and real use cases. Turn your ideas into intelligent software.

Beyond the Hype: Your Practical Guide to Integrating Machine Learning into Real Applications

Let's be real. We've all seen the flashy headlines. "AI REVOLUTIONIZES EVERYTHING!" "BUILD A SELF-DRIVING CAR IN 10 LINES OF CODE!" It’s easy to get caught up in the magic of training a model that can identify a cat with 99% accuracy.

But here's the secret sauce that nobody talks about in those viral tweets: That model is utterly useless sitting alone on your laptop.

The real magic, the part that actually creates value and "revolutionizes" things, happens when you seamlessly integrate that model into a living, breathing application. It's the difference between a scientist's petri dish and a life-saving vaccine distributed worldwide.

So, if you're tired of just building models in a Jupyter notebook and want to learn how to actually make them do something, you're in the right place. This is your no-fluff guide to integrating Machine Learning models.

First Off, What Do We Even Mean by "Integrating ML Models"?

In simple terms, it’s the process of connecting a trained ML model to a software application so that the application can use the model's predictions to make decisions or provide intelligent features.

Think of it like this:

The ML Model: The brain. It's been trained, it's smart, it can make predictions.

Your Application: The body. It's the website, the mobile app, the server that users interact with.

Integration is the nervous system that connects the brain to the body. Without it, the brain is just a lump of grey matter with no way to affect the world.

The "How": Main Methods for Integration

There isn't a one-size-fits-all approach. The best method depends on your app's needs, the model's complexity, and your infrastructure. Let's break down the most common ones.

1. The In-App Workhorse: Embedded Models

This is where you package the model directly inside your application code. It runs on the same server or even on the user's device.

How it works: You save your trained model (e.g., as a .pkl file in Python or a .joblib file) and load it into your application's memory. When you need a prediction, you call the model directly from your code.

Pros:

Blazing Fast: No network calls means super low latency.

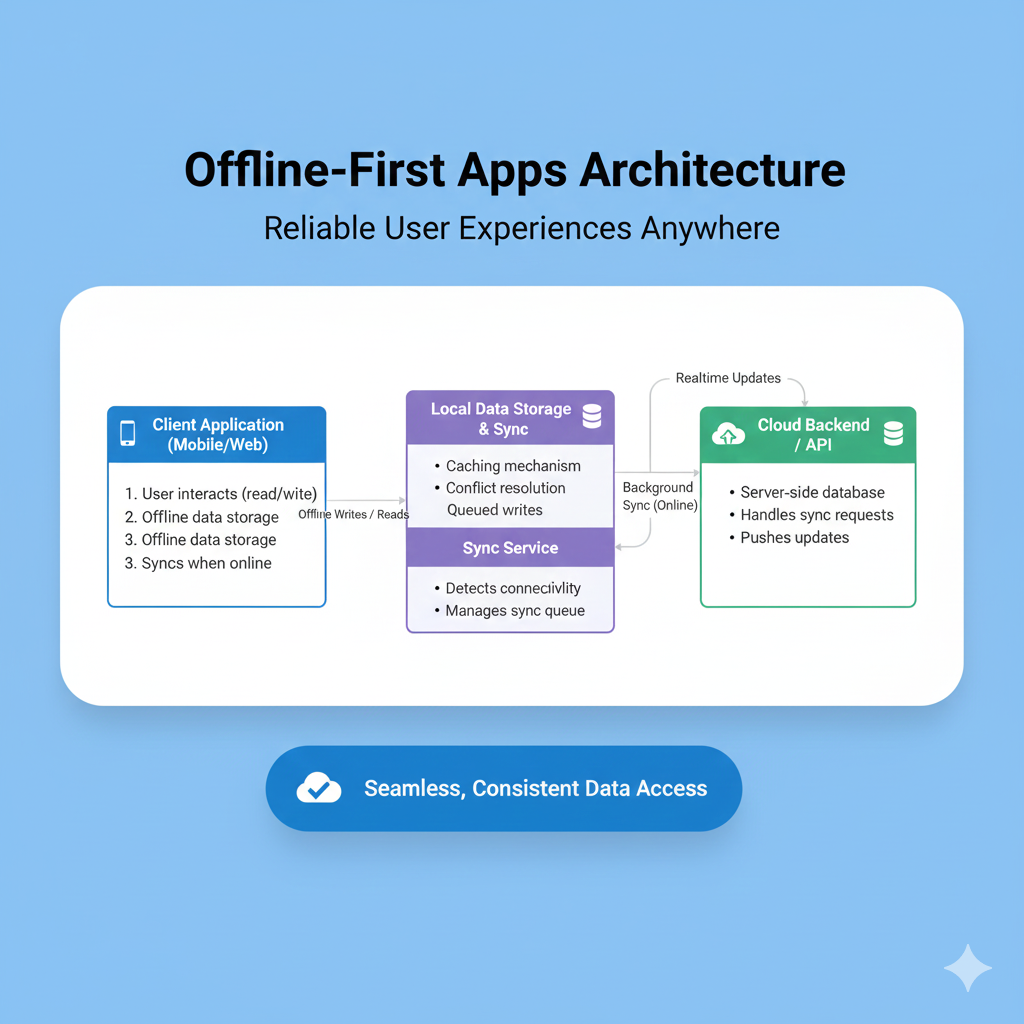

Offline Functionality: Perfect for mobile apps that need to work without an internet connection.

Cons:

App Bloat: Your app's size can balloon, especially with large models.

Update Hell: To update the model, you have to push a whole new version of your app.

Real-World Example: A photo-editing app on your phone that uses an ML model for background blur or style filters. The model lives right on your device.
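Here's a rough sketch of the embedded approach using only Python's standard library. To stay dependency-free, the "model" is just a dict of made-up weights (in a real project it would be a fitted estimator object), and the `model.pkl` path and `predict` function are illustrative names, not anything prescribed above.

```python
import math
import pickle
import tempfile
from pathlib import Path

# Hypothetical "trained model": a plain dict of weights stands in for a
# real fitted estimator so the sketch needs no third-party packages.
trained_model = {"weights": [0.8, -0.5], "bias": 0.1}

# Training side: serialize the model to a .pkl file.
model_path = Path(tempfile.mkdtemp()) / "model.pkl"
with open(model_path, "wb") as f:
    pickle.dump(trained_model, f)

# Application side: load the model once at startup and keep it in memory.
with open(model_path, "rb") as f:
    MODEL = pickle.load(f)


def predict(features):
    """Return a probability from the in-memory model, with no network call."""
    score = MODEL["bias"] + sum(w * x for w, x in zip(MODEL["weights"], features))
    return 1.0 / (1.0 + math.exp(-score))


print(round(predict([1.0, 2.0]), 3))
```

The important part is that the model is loaded once, not on every request. Also keep in mind that unpickling can execute arbitrary code, so only load .pkl files you produced yourself or otherwise trust.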

2. The Gold Standard: Microservices & APIs

This is the most common and scalable approach for web applications. You deploy your model as a separate service, and your main application talks to it via an API (Application Programming Interface), usually a REST API.

How it works: You wrap your model in a small, dedicated web server using frameworks like Flask or FastAPI in Python. This server has one main job: listen for requests containing input data, run it through the model, and send back the prediction.

Pros:

Scalability: You can scale your model server independently of your main app. If predictions are in high demand, just spin up more model servers.

Tech Flexibility: Your main app can be in JavaScript (Node.js), Java, C#, etc., and it can still use a model written in Python.

Easy Updates: You can retrain and redeploy your model without touching the main application.

Cons:

Network Latency: The round-trip time for the API call adds a delay.

Complexity: You now have one more service to manage, monitor, and secure.

Real-World Example: Nearly every major service. When you type a message in Gmail and it suggests a quick reply ("Sounds good!" "Thanks!"), your text is being sent to a Google ML model via an API, which returns the suggested responses.
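To make the model-as-a-service idea concrete, here's a minimal sketch. The text above mentions Flask and FastAPI; this version swaps in Python's built-in `http.server` so it runs without installing anything, but the shape is the same. The `/predict` route, the `features` field, and the `model_predict` stand-in are all assumptions made for illustration.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def model_predict(features):
    """Stand-in for a real model: returns the mean of the inputs."""
    return sum(features) / len(features)


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self._send(404, {"error": "not found"})
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            payload = json.loads(self.rfile.read(length))
            features = [float(x) for x in payload["features"]]
            if not features:
                raise ValueError("empty features")
        except (ValueError, KeyError, TypeError):
            self._send(400, {"error": 'expected JSON like {"features": [1.0, 2.0]}'})
            return
        self._send(200, {"prediction": model_predict(features)})

    def _send(self, status, body):
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, *args):
        pass  # silence per-request logging for the sketch


def start_server(port=0):
    """Start the model server in a background thread (port 0 = any free port)."""
    server = HTTPServer(("127.0.0.1", port), PredictHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def call_model_api(base_url, features):
    """What the main application does: POST the input, read back the prediction."""
    request = urllib.request.Request(
        base_url + "/predict",
        data=json.dumps({"features": features}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return json.loads(response.read())["prediction"]
```

To try it, call `start_server()`, build the base URL from `server.server_address`, and pass it to `call_model_api`. The caller only sees JSON over HTTP, which is why the main app could just as easily be written in Node.js or Java.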

3. The Power User's Playground: Cloud-Based ML Platforms

Why build the infrastructure yourself when cloud giants have already done it for you? Services like Google Cloud's Vertex AI (the successor to AI Platform), Amazon SageMaker, and Azure Machine Learning provide end-to-end tools to train, deploy, and manage models.

How it works: You upload your trained model to the platform, which handles everything—deployment, scaling, and providing you with an API endpoint. It's the "deploy model as a service" button.

Pros:

Minimal DevOps: Little to no server management. Provisioning and scaling are handled by the cloud provider.

Built-in Tools: Comes with monitoring, versioning, and logging out-of-the-box.

Cons:

Cost: Can become expensive, especially with high traffic.

Vendor Lock-in: You're tied to that specific cloud provider's ecosystem.

Real-World Example: A startup that wants to add sentiment analysis to its customer support tickets but doesn't have a dedicated ML infrastructure team. They can quickly train a model and deploy it on SageMaker in hours.

The Real-World Glow-Up: Use Cases That Matter

Let's move from theory to practice. Here’s how integration makes apps smarter:

E-commerce & Streaming: That "Recommended for You" section on Netflix or Amazon? It’s not magic. It's an ML model, integrated via APIs, analyzing your watching or purchasing history in real time and serving personalized suggestions.

Finance & Fraud Detection: When your bank texts you about a suspicious transaction, an integrated ML model has just analyzed the payment pattern, location, and amount in milliseconds and flagged it as anomalous.

Healthcare: Apps can now integrate models that analyze skin photos for potential melanoma risk or scan X-ray images for fractures, providing instant preliminary assessments.

Social Media: The content on your Instagram or TikTok feed is curated by sophisticated ML models that decide what to show you next to maximize engagement.

Best Practices: Don't Be a "Move Fast and Break Things" Dev

Integrating ML is messy. Here’s how to do it right.

Start Simple, Then Scale: Don't try to build a distributed ML system on day one. A simple Flask API is a perfect starting point.

Version Everything: Version your model files, your training data, and your code. You will need to roll back when a new model performs worse.

Monitor, Monitor, Monitor: A software bug throws an error. A failing ML model stays silent and gives you wrong, but seemingly valid, predictions. Monitor for data drift (when the inputs you see in production no longer look like your training data) and concept drift (when the relationship between those inputs and the thing you're predicting changes).

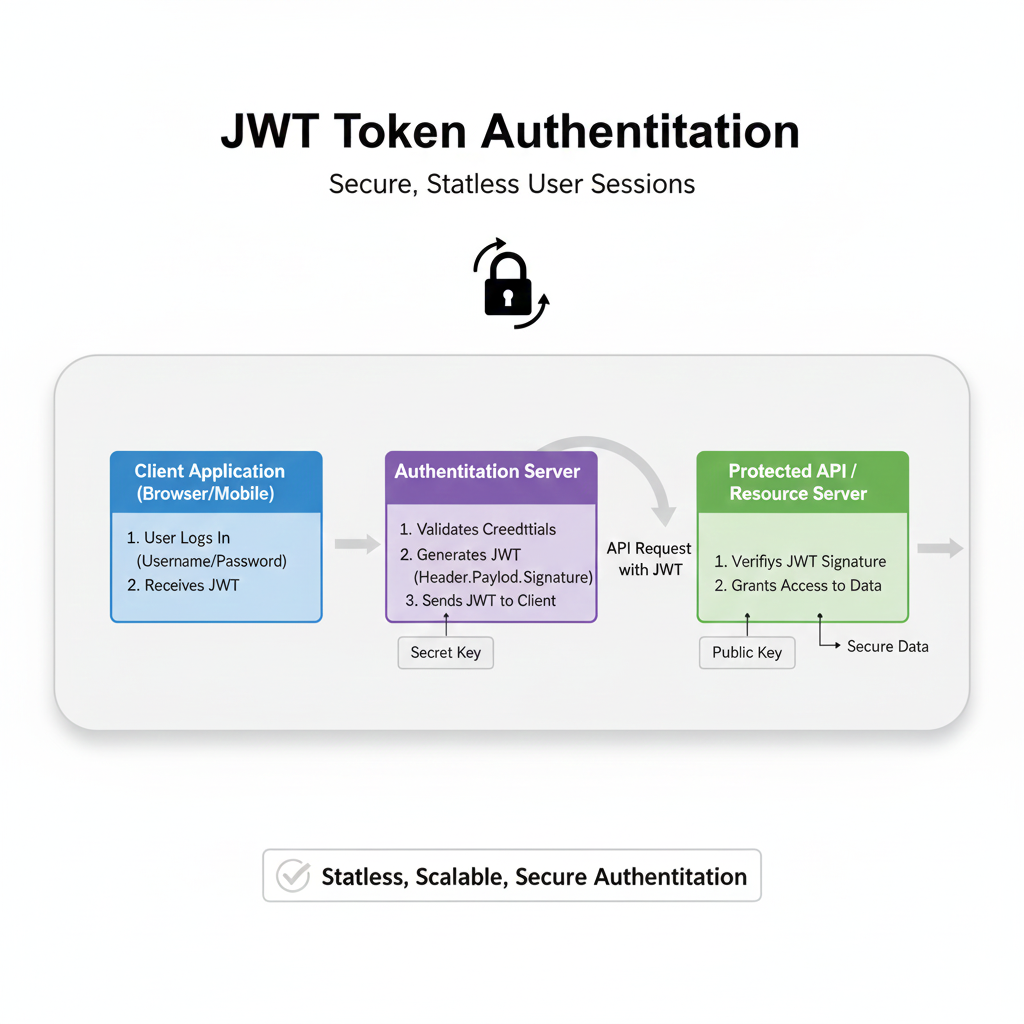

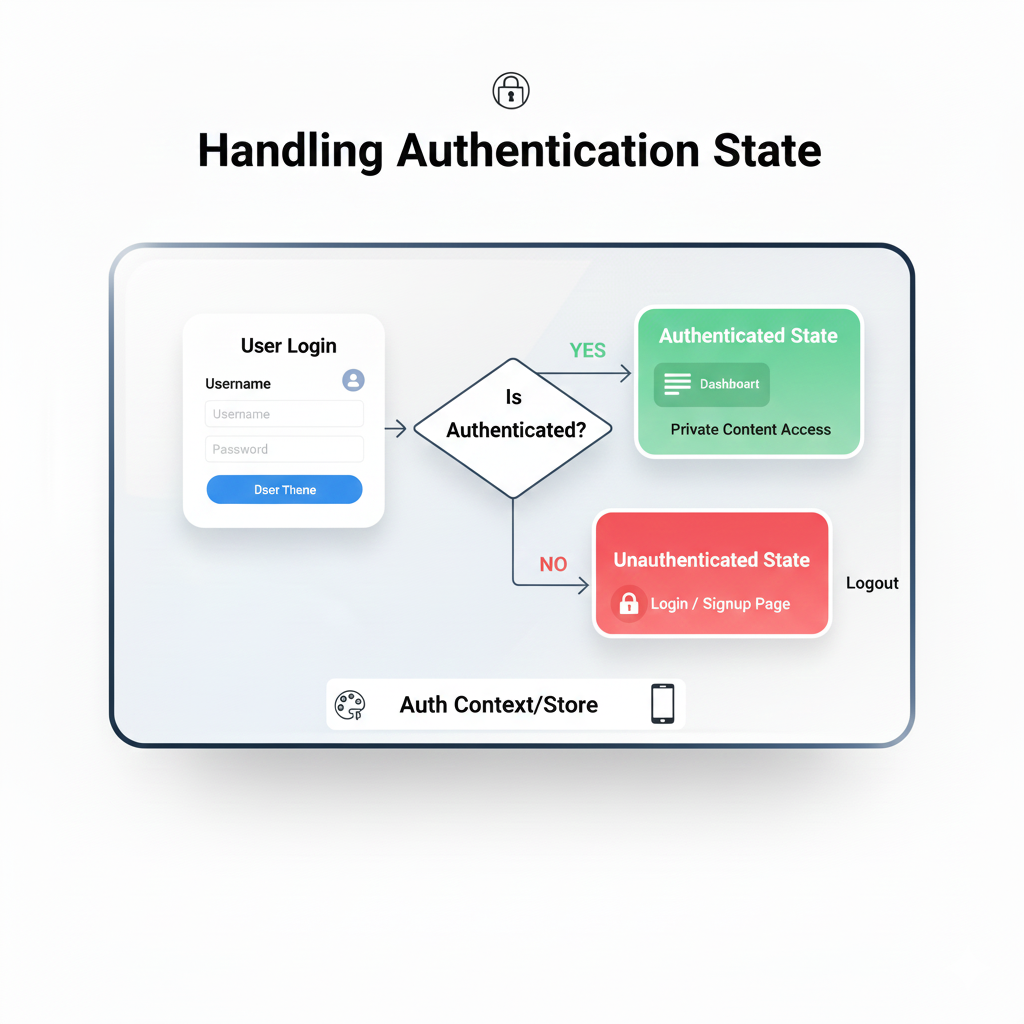

Security is Non-Negotiable: Your model API is a gateway to your system. Use authentication (API keys, tokens) and validate all input data to prevent malicious attacks.

Plan for Failure: What happens if your model server goes down? Your API should have graceful fallbacks, like returning a default value or a friendly error message, instead of just crashing the whole app.
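The last two practices, securing the API and planning for failure, can be sketched in a few lines. Treat this as one possible shape, not a complete security layer: the API key, the expected feature count, and the default prediction are hypothetical values, and `call_model` is whatever function actually reaches your model.

```python
import hmac
import math

EXPECTED_API_KEY = "replace-me"  # hypothetical; load from a secret store in practice
EXPECTED_FEATURES = 3            # hypothetical input size for this model
DEFAULT_PREDICTION = 0.0         # hypothetical safe default


def is_authorized(api_key):
    """Compare keys in constant time so timing does not leak the secret."""
    return hmac.compare_digest(api_key.encode(), EXPECTED_API_KEY.encode())


def validate_features(features):
    """Accept only a list of the expected number of finite numbers."""
    if not isinstance(features, list) or len(features) != EXPECTED_FEATURES:
        raise ValueError("expected a list of %d numbers" % EXPECTED_FEATURES)
    clean = []
    for x in features:
        if isinstance(x, bool) or not isinstance(x, (int, float)):
            raise ValueError("features must be numbers")
        try:
            value = float(x)
        except OverflowError:
            raise ValueError("feature out of range") from None
        if not math.isfinite(value):
            raise ValueError("features must be finite")
        clean.append(value)
    return clean


def predict_with_fallback(call_model, features):
    """Call the model; on failure return a default and flag it for the caller."""
    clean = validate_features(features)
    try:
        return {"prediction": call_model(clean), "fallback": False}
    except Exception:  # e.g. a timeout or connection error from the model service
        return {"prediction": DEFAULT_PREDICTION, "fallback": True}
```

Bad input still raises an error, because that's the caller's mistake and they should hear about it. A model outage, on the other hand, gets turned into a flagged default so the rest of the app keeps working.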

Building these robust, production-ready systems requires a solid foundation in both backend development and ML principles. It's the core of what modern software engineering is becoming. If you want to build those skills, codercrafter.in offers professional software development courses such as Python Programming, Full Stack Development, and MERN Stack.

FAQs: Your Burning Questions, Answered

Q1: I'm a frontend developer. Do I need to know the deep math behind ML to integrate a model?

A: Not really! You mainly need to understand how to make an API call. The ML team will provide you with the API endpoint, the expected input format (e.g., a JSON object with specific fields), and the structure of the output. Your job is to send the data and handle the response.

Q2: What's the biggest challenge in ML integration?

A: Often, the problem isn't the code; it's the data. You have to make sure the data you send to the model in production is preprocessed exactly the same way the training data was. A tiny mismatch can lead to garbage predictions.
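One common way to avoid that mismatch is to compute the preprocessing parameters once, at training time, and ship them with the model so the serving code reuses them instead of recomputing anything. Here's a small sketch assuming simple standard scaling; `fit_scaler`, `preprocess`, and the sample rows are made up for illustration.

```python
import json


def fit_scaler(rows):
    """Compute per-column mean and standard deviation from the training rows."""
    n = len(rows)
    columns = list(zip(*rows))
    means = [sum(col) / n for col in columns]
    stds = []
    for col, mean in zip(columns, means):
        std = (sum((v - mean) ** 2 for v in col) / n) ** 0.5
        stds.append(std if std > 0 else 1.0)  # avoid dividing by zero
    return {"means": means, "stds": stds}


def preprocess(row, scaler):
    """The single preprocessing function used by BOTH training and serving."""
    return [(v - m) / s for v, m, s in zip(row, scaler["means"], scaler["stds"])]


# Training time: fit the scaler and save it next to the model artifact.
training_rows = [[10.0, 200.0], [20.0, 400.0], [30.0, 600.0]]
scaler = fit_scaler(training_rows)
saved_scaler = json.dumps(scaler)

# Serving time: load the saved parameters; never refit on live data.
serving_scaler = json.loads(saved_scaler)
print(preprocess([20.0, 400.0], serving_scaler))
```

Because training and serving call the same `preprocess` with the same stored numbers, a given input is transformed identically in both places.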

Q3: Can I integrate an ML model into a mobile app?

A: Absolutely! You have two choices: 1) On-Device: Use frameworks like TensorFlow Lite or PyTorch Mobile to run a lightweight version of the model directly on the phone. 2) Cloud API: Have your app send data to your model API in the cloud and receive the prediction.

Q4: How do I know if my integrated model is still accurate over time?

A: This is the key to MLOps (Machine Learning Operations). You set up continuous monitoring to track the model's performance metrics on live data. If you see a significant drop, it's a signal that the model needs to be retrained with new data.
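As a very rough illustration of what that monitoring could look like, the sketch below checks two signals: how far a feature's live mean has moved from its training mean, and accuracy on recent predictions once true labels arrive (which in practice is often delayed). The function names and both thresholds are hypothetical, and production setups typically use richer statistics than a mean shift.

```python
import statistics

DRIFT_THRESHOLD = 0.5  # hypothetical: alert if the mean moves by half a std dev
ACCURACY_FLOOR = 0.8   # hypothetical: alert if live accuracy drops below 80%


def mean_shift(training_values, live_values):
    """Distance between live and training means, in training standard deviations."""
    mu = statistics.fmean(training_values)
    sigma = statistics.pstdev(training_values)
    live_mu = statistics.fmean(live_values)
    if sigma == 0:
        return 0.0 if live_mu == mu else float("inf")
    return abs(live_mu - mu) / sigma


def live_accuracy(predictions, labels):
    """Fraction of recent predictions that matched the labels that came in later."""
    pairs = list(zip(predictions, labels))
    return sum(p == y for p, y in pairs) / len(pairs)


def needs_retraining(training_values, live_values, predictions, labels):
    """Flag the model when inputs have drifted or accuracy has dropped."""
    drifted = mean_shift(training_values, live_values) > DRIFT_THRESHOLD
    degraded = live_accuracy(predictions, labels) < ACCURACY_FLOOR
    return drifted or degraded
```

A scheduled job could run `needs_retraining` over the last day or week of logged requests and raise an alert when it returns `True`.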

Conclusion: Stop Just Building Brains. Start Building Nervous Systems.

The journey from a cool model in a notebook to a feature that impacts users is the most critical—and often overlooked—part of the AI/ML lifecycle. It requires you to think like both a data scientist and a software engineer.

It's about building bridges, not just islands of intelligence. By understanding the methods, best practices, and real-world applications of ML integration, you position yourself at the forefront of modern software development.

This fusion of development and intelligence is the future. Ready to build it? The journey to becoming a developer who can craft these intelligent systems starts with the right skills. Explore our project-based, industry-aligned courses in Full Stack Development and Python at codercrafter.in and let's start building.