JavaScript Numbers: A Human's Guide to the Quirks and Power

Tired of confusing number quirks in JavaScript? Let's break down integers, floats, NaN, and the infamous 0.1 + 0.2 !== 0.3 in a friendly, practical way.

Let's talk about numbers. You know, those things we use to count likes, calculate cart totals, and animate sliding menus. In JavaScript, numbers seem simple on the surface. You type let price = 19.99; and just… move on.

But then, one day, you run a simple check in your console:

javascript

console.log(0.1 + 0.2 === 0.3); // false

And your world shatters a little. What do you mean, false? Is my math broken? Is JavaScript broken?

Take a deep breath. It’s not you, and it’s not (entirely) JavaScript. It’s just computers being computers. Understanding how JavaScript handles numbers under the hood is one of those small steps that separates a beginner from a confident developer. So, let's pull back the curtain together.

The One Number Type to Rule Them All

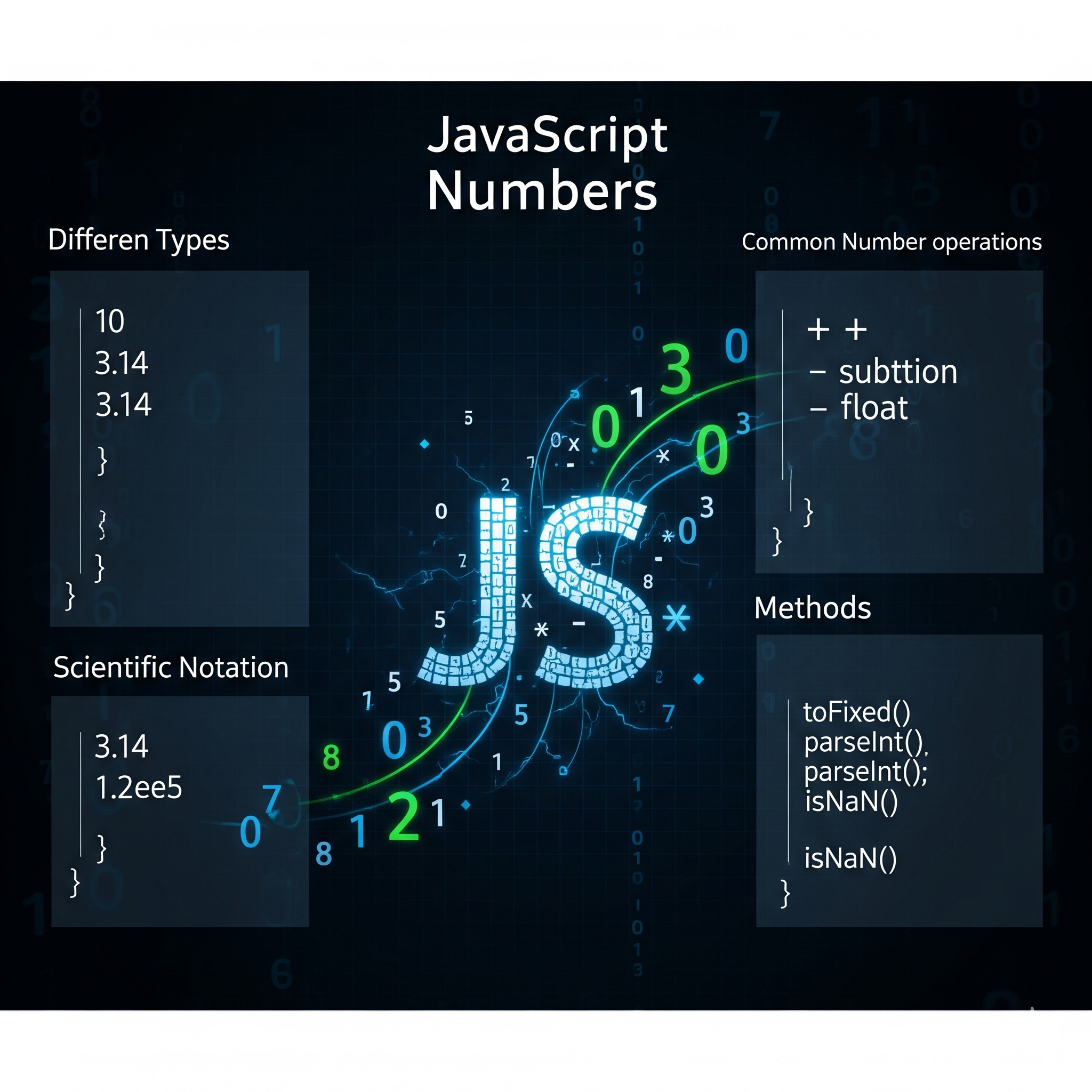

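Unlike some other languages (looking at you, Java and C#), JavaScript is pretty laid-back about its numbers. It doesn't have separate types for integers, floats, doubles, or decimals. For everyday math it has just one type: Number. (There is also BigInt for arbitrarily large integers, but that's a separate tool for a separate job.)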

Every Number you'll ever use, from 42 to 3.14159, is represented as a double-precision 64-bit floating-point number. That's a mouthful. Just think of it as a floating-point number, even when it looks like a whole number.

This simplicity is great! You don't need to declare a type. But this one-type-fits-all approach is also the source of its famous quirks.
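A quick way to see the one-type model in action is to ask JavaScript about a few values. The sketch below uses only built-ins; the variable names are made up for illustration.

```javascript
// Whole numbers and fractions share the same type.
const wholeType = typeof 42;         // "number"
const fractionType = typeof 3.14159; // "number"

// 5 and 5.0 are the very same value, not an int and a float.
const sameValue = 5 === 5.0;              // true
const looksWhole = Number.isInteger(5.0); // true

// The flip side: integers are only exact up to Number.MAX_SAFE_INTEGER (2^53 - 1).
const maxSafe = Number.MAX_SAFE_INTEGER;      // 9007199254740991
const collides = maxSafe + 1 === maxSafe + 2; // true, both round to the same double

console.log(wholeType, fractionType, sameValue, looksWhole, maxSafe, collides);
```

The last line is the one worth remembering: past the safe range, distinct integers can silently collapse into the same stored value.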

The Classic "0.1 + 0.2" Problem

Back to our emotional support crisis. Why does this happen?

javascript

let result = 0.1 + 0.2;

console.log(result); // 0.30000000000000004

The answer lies in how computers represent numbers in binary (base-2). We humans think in base-10. The number 0.1 is a nice, clean fraction in base-10 (1/10), but in base-2, it becomes an infinitely repeating number, like trying to write 1/3 as a decimal (0.3333...) in base-10.

JavaScript's Number type has to cut this infinite expansion off somewhere (a 64-bit double keeps 53 bits of precision), leading to a tiny rounding error. When you add 0.1 and 0.2, you're actually adding two slightly imprecise numbers, and the result is also slightly imprecise.
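To make the rounding visible, you can ask for more digits than console.log normally prints. This sketch relies on the standard toFixed() method; the variable names are illustrative only.

```javascript
// Print 20 decimal places to expose what is actually stored.
const storedTenth = (0.1).toFixed(20);     // "0.10000000000000000555"
const storedFifth = (0.2).toFixed(20);     // "0.20000000000000001110"
const storedSum = (0.1 + 0.2).toFixed(20); // "0.30000000000000004441"
const storedTarget = (0.3).toFixed(20);    // "0.29999999999999998890"

// Fractions built from powers of two have no such problem.
const exactInBinary = 0.5 + 0.25 === 0.75; // true

console.log(storedTenth, storedFifth, storedSum, storedTarget, exactInBinary);
```

The stored 0.3 sits slightly below the true value while the computed sum sits slightly above it, which is why the two never compare as equal.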

The solution? Don't rely on strict equality (===) to compare the results of fractional arithmetic. Instead, you can:

Round the result to a sensible number of decimal places for display:

javascript

let result = 0.1 + 0.2;
console.log(result.toFixed(2)); // "0.30"

Check if numbers are close enough (within a margin of error) for logic:

javascript

let areEqual = Math.abs(0.3 - (0.1 + 0.2)) < Number.EPSILON;
console.log(areEqual); // true
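One caveat: Number.EPSILON is the gap between 1 and the next representable number, so it only works as a tolerance for values around 1. For larger or smaller magnitudes, a relative tolerance is a common alternative. Here is a minimal sketch; approxEqual and its default tolerances are hypothetical choices, not a built-in API.

```javascript
// Hypothetical helper: true when a and b differ by no more than a
// relative tolerance (scaled to their size) or a small absolute floor.
function approxEqual(a, b, relTol = 1e-9, absTol = 1e-12) {
  if (a === b) return true; // also covers Infinity === Infinity
  const diff = Math.abs(a - b);
  const scale = Math.max(Math.abs(a), Math.abs(b));
  return diff <= Math.max(relTol * scale, absTol);
}

console.log(approxEqual(0.1 + 0.2, 0.3));          // true
console.log(approxEqual(1000.1 + 1000.2, 2000.3)); // true
console.log(approxEqual(1, 1.1));                  // false
```

Pick the tolerances to match your data: a physics simulation and a shopping cart will want very different margins.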

Meet the Special Guests: NaN, Infinity, and -Infinity

The Number type has a few special members in its family.

NaN (Not-a-Number)

This is JavaScript's way of saying, "I tried to do a math operation, and it completely failed." A classic example:

javascript

console.log("hello" / 5); // NaN

The key thing to remember about NaN? It's the only value in JavaScript that is not equal to itself.

javascript

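console.log(NaN === NaN); // false

To check for it, prefer Number.isNaN(). The older global isNaN() converts its argument to a number first, so it also returns true for values like "hello".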
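The two checks behave differently on input that is not already a number. A short sketch, using only built-ins (the variable names are illustrative):

```javascript
// Global isNaN() coerces first: "hello" becomes NaN, so the answer is true.
const looseCheck = isNaN("hello");         // true

// Number.isNaN() does no coercion: a string is simply not the NaN value.
const strictCheck = Number.isNaN("hello"); // false

// Both agree when the value really is NaN.
const failedMath = "hello" / 5;
const strictOnNaN = Number.isNaN(failedMath); // true

// Object.is() is another way to compare against NaN directly.
const sameAsNaN = Object.is(failedMath, NaN); // true

console.log(looseCheck, strictCheck, strictOnNaN, sameAsNaN);
```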

Infinity and -Infinity

These show up when you divide a non-zero number by zero or calculate a result larger than a Number can hold (about 1.8e308). Note that 0 / 0 gives NaN instead.
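The overflow case is easy to reproduce with Number.MAX_VALUE, the largest finite Number. A small sketch with illustrative variable names:

```javascript
const biggest = Number.MAX_VALUE;   // about 1.8e308
const overflowed = biggest * 2;     // Infinity
const negOverflowed = -biggest * 2; // -Infinity

// Zero divided by zero has no sensible answer, so it is NaN rather than Infinity.
const zeroOverZero = 0 / 0;         // NaN

// Number.isFinite() rejects Infinity, -Infinity, and NaN alike.
const stillFinite = Number.isFinite(overflowed); // false

console.log(overflowed, negOverflowed, zeroOverZero, stillFinite);
```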

javascript

console.log(1 / 0); // Infinity

console.log(-1 / 0); // -Infinity

Working With Numbers in the Real World

So, with all these quirks, how do we function day-to-day? Here are a few practical tips:

Converting Strings: Prefer Number("123") or the unary + operator (+"123") over parseInt() for simple conversions, unless you need to read a leading number out of a longer string (parseInt("123px", 10)).

Checking for Validity: Use Number.isFinite() to check that something is a usable number before doing math on it. On its own, typeof value === 'number' is also true for NaN and Infinity. Use Number.isInteger() when you specifically need a whole number.

For Sensitive Calculations (Finance!): Consider using a library like Big.js or representing money as cents (integers) to sidestep fractional rounding errors. $10.50 becomes 1050 cents in your code.
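Here is how those three tips might look side by side. This is a sketch under assumptions: formatCents is a hypothetical helper invented for the example, and the prices are made up.

```javascript
// 1. Converting strings: Number() wants the whole string to be numeric,
//    parseInt() reads a leading integer and ignores the rest.
const strict = Number("123");           // 123
const strictFail = Number("123px");     // NaN
const lenient = parseInt("123px", 10);  // 123

// 2. Checking validity: typeof alone lets NaN through, Number.isFinite() does not.
const typeofSaysNumber = typeof strictFail === "number"; // true
const actuallyUsable = Number.isFinite(strictFail);      // false

// 3. Money as integer cents: the arithmetic stays exact.
const priceCents = 1050;             // $10.50
const totalCents = priceCents * 3;   // 3150
const dimesAdd = 10 + 20 === 30;     // true, unlike 0.1 + 0.2 === 0.3

// Hypothetical helper: convert back to dollars only for display.
function formatCents(cents) {
  return "$" + (cents / 100).toFixed(2);
}

console.log(strict, strictFail, lenient, typeofSaysNumber, actuallyUsable);
console.log(formatCents(totalCents)); // "$31.50"
```

Integer cents stay exact as long as the totals remain below Number.MAX_SAFE_INTEGER, which is plenty of headroom for most shopping carts.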

The Takeaway: It's a Feature, Not a Bug

JavaScript's number system is a trade-off. It offers immense simplicity and speed for a vast range of problems, from video games to web apps. The "weird" parts are a byproduct of following an international standard (IEEE 754) that nearly all modern hardware and most programming languages use.

Don't be afraid of numbers. Just understand their personality. They're powerful, mostly predictable, but with a few quirks you need to know about. And now, you do.

Next time you see 0.30000000000000004, you can just nod and say, "Ah, yes. I know you." And then you'll know exactly how to handle it.

What's your favorite JavaScript number quirk? Have you ever been bitten by one in production? Share your stories in the comments below!